Reinforcement learning

ME 343; March 13th, 2019

Prof. Eric Darve

Recap on reinforcement learning

- Case 1: a model for the environment is known.

- Bellman equation can be used to calculate various quantities like the value function

$$v(s) = \mathbb{E}[R_t \mid S_t = s] + \gamma \sum_{s'} P_{ss'} \, v(s')$$
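As a minimal sketch of how the Bellman equation can be used when the model is known, the fixed point can be approached by repeatedly applying the right-hand side as an update. The 3-state Markov reward process below (`R`, `P`, `GAMMA`) is entirely made up for illustration.

```python
# Hypothetical 3-state Markov reward process; all numbers are illustrative.
GAMMA = 0.9
R = [1.0, 0.0, 0.0]              # R[s]: expected immediate reward in state s
P = [                            # P[s][s2]: probability of moving from s to s2
    [0.5, 0.5, 0.0],
    [0.0, 0.5, 0.5],
    [0.0, 0.0, 1.0],             # state 2 is absorbing and pays nothing
]


def bellman_backup(v):
    """Apply v(s) <- R(s) + gamma * sum_s' P(s, s') v(s') to every state."""
    n = len(v)
    return [R[s] + GAMMA * sum(P[s][s2] * v[s2] for s2 in range(n))
            for s in range(n)]


def evaluate(tol=1e-12, max_iters=10_000):
    """Iterate the backup until successive value functions agree within tol."""
    v = [0.0] * len(R)
    for _ in range(max_iters):
        new_v = bellman_backup(v)
        if max(abs(a - b) for a, b in zip(new_v, v)) < tol:
            return new_v
        v = new_v
    return v


v = evaluate()
```

Because the backup is a contraction for $\gamma < 1$, the iteration converges regardless of the starting guess; for small problems the same linear system could also be solved directly.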

Control! Optimal value function

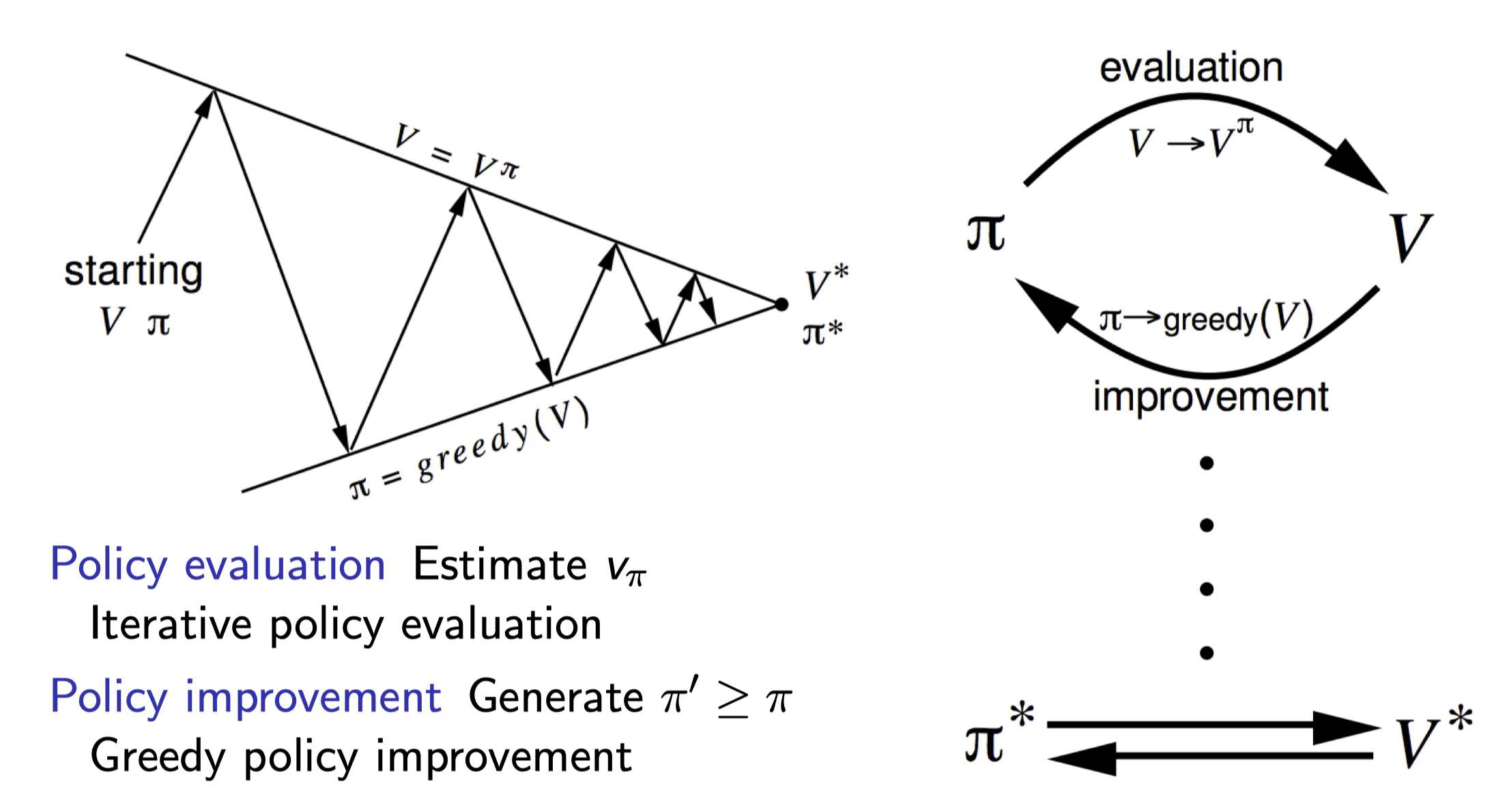

Iterate:

- Policy evaluation: update value function based on policy

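$$v_{k+1}(s) = \sum_a \pi_k(a \mid s) \Big( R_s^a + \gamma \sum_{s'} P_{ss'}^a \, v_k(s') \Big)$$

- Policy improvement using greedy approach:

$$\arg\max_a \Big[ R_s^a + \gamma \sum_{s'} P_{ss'}^a \, v_{k+1}(s') \Big]$$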
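The two alternating steps can be sketched on a toy problem. The 2-state, 2-action MDP below (`R`, `P`, the "stay"/"switch" actions) is a hypothetical example, and the evaluation step runs many sweeps rather than a single one, which is one of several reasonable choices.

```python
GAMMA = 0.9
STATES, ACTIONS = [0, 1], [0, 1]     # hypothetical: action 0 = stay, 1 = switch
R = [[0.0, 0.0], [1.0, 0.0]]         # R[s][a]; only staying in state 1 pays 1
P = [                                # P[s][a][s2], deterministic transitions
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]


def q_value(s, a, v):
    """R_s^a + gamma * sum_s' P_ss'^a v(s')."""
    return R[s][a] + GAMMA * sum(P[s][a][s2] * v[s2] for s2 in STATES)


def policy_evaluation(pi, v, sweeps=500):
    """Repeated sweeps of v_{k+1}(s) = sum_a pi(a|s) q(s, a; v_k)."""
    for _ in range(sweeps):
        v = [sum(pi[s][a] * q_value(s, a, v) for a in ACTIONS) for s in STATES]
    return v


def greedy_policy(v):
    """Deterministic policy that puts all mass on argmax_a q(s, a; v)."""
    pi = []
    for s in STATES:
        best = max(ACTIONS, key=lambda a: q_value(s, a, v))
        pi.append([1.0 if a == best else 0.0 for a in ACTIONS])
    return pi


def policy_iteration():
    pi = [[0.5, 0.5] for _ in STATES]    # start from the uniform policy
    v = [0.0 for _ in STATES]
    while True:
        v = policy_evaluation(pi, v)
        new_pi = greedy_policy(v)
        if new_pi == pi:                 # policy is stable: stop
            return pi, v
        pi = new_pi


pi, v = policy_iteration()
```

In this toy problem the loop settles on "switch" in state 0 and "stay" in state 1.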

Case 2: model-free learning

- For cases where the environment is too large to be completely evaluated or simply unknown

- Learn based on Monte-Carlo sampling

Full episodes

$$V(S_t) \leftarrow V(S_t) + \alpha \big( G_t - V(S_t) \big)$$
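A minimal sketch of this Monte-Carlo update, assuming an every-visit variant and a value table stored as a dictionary. The episode (states `"A"`, `"B"`, `"C"` and its rewards) is made up for illustration.

```python
GAMMA = 0.9
ALPHA = 0.1


def returns_from_rewards(rewards, gamma=GAMMA):
    """rewards[t] holds R_{t+1}; result[t] is G_t = R_{t+1} + gamma * G_{t+1}."""
    g, out = 0.0, []
    for r in reversed(rewards):
        g = r + gamma * g
        out.append(g)
    return out[::-1]


def mc_update(V, states, rewards, alpha=ALPHA, gamma=GAMMA):
    """Every-visit Monte-Carlo: V(S_t) <- V(S_t) + alpha * (G_t - V(S_t))."""
    for s, g in zip(states, returns_from_rewards(rewards, gamma)):
        old = V.get(s, 0.0)
        V[s] = old + alpha * (g - old)
    return V


# One full (hypothetical) episode: A -> B -> C -> end, reward 1 on the last step.
V = mc_update({}, states=["A", "B", "C"], rewards=[0.0, 0.0, 1.0])
```

The update can only run once the episode has finished, since $G_t$ depends on every later reward.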

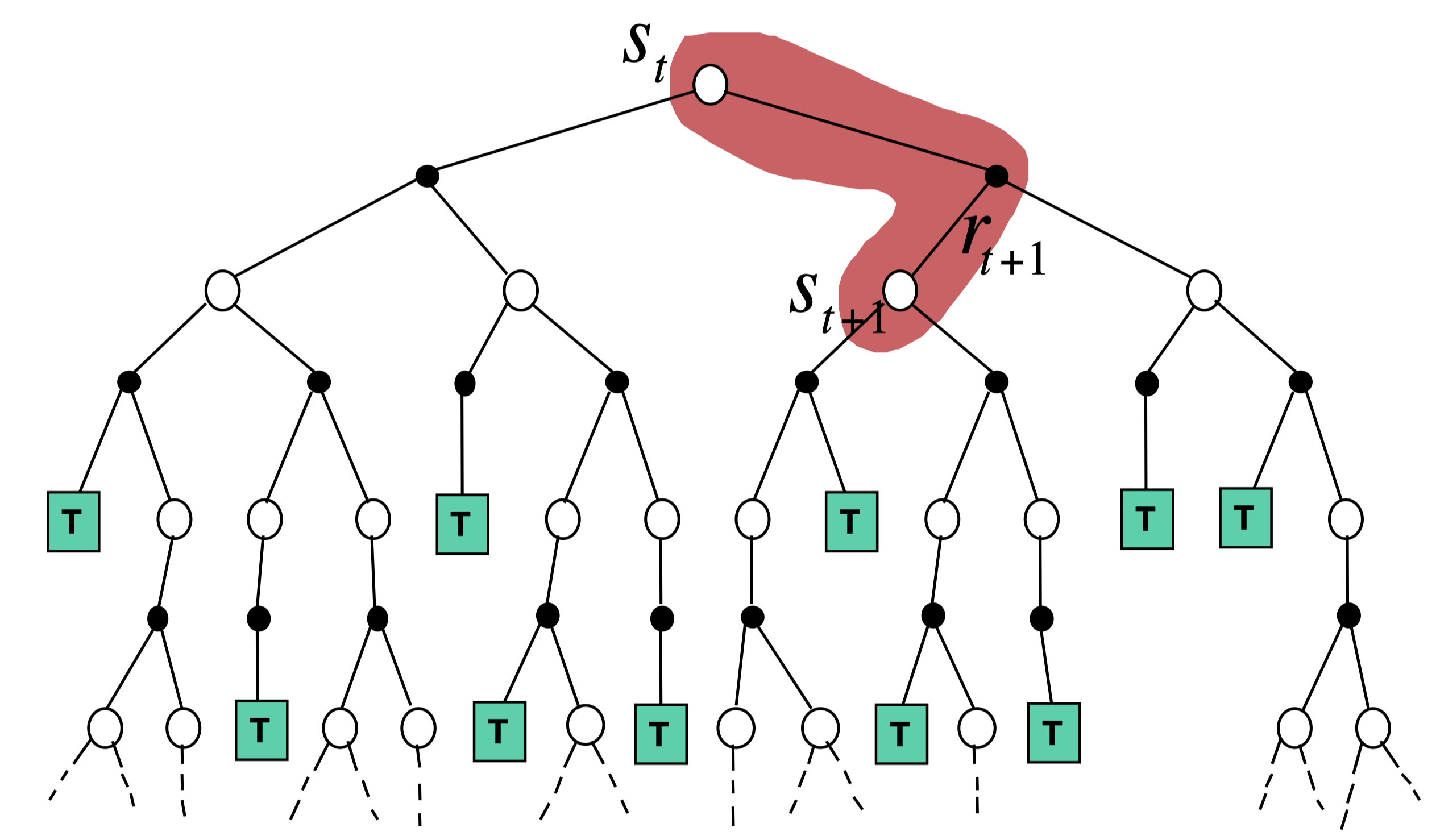

Temporal-difference backup

$$V(S_t) \leftarrow V(S_t) + \alpha \big( R_{t+1} + \gamma V(S_{t+1}) - V(S_t) \big)$$
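For comparison, a sketch of the temporal-difference update applied one transition at a time. The deterministic chain and the `terminal` flag are assumptions made for the example; the value of a terminal successor is taken to be zero.

```python
def td0_update(V, s, r, s_next, alpha=0.1, gamma=0.9, terminal=False):
    """V(S_t) <- V(S_t) + alpha * (R_{t+1} + gamma * V(S_{t+1}) - V(S_t))."""
    bootstrap = 0.0 if terminal else gamma * V.get(s_next, 0.0)
    old = V.get(s, 0.0)
    V[s] = old + alpha * (r + bootstrap - old)
    return V


# Hypothetical deterministic chain A -> B -> C -> end, reward 1 on the last step.
transitions = [
    ("A", 0.0, "B", False),
    ("B", 0.0, "C", False),
    ("C", 1.0, None, True),
]

V = {}
for _ in range(2000):                      # replay the same episode many times
    for s, r, s_next, terminal in transitions:
        td0_update(V, s, r, s_next, terminal=terminal)
```

Unlike the Monte-Carlo update, each step here uses only the next reward and the current estimate of the next state, so learning can proceed before the episode ends.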

Policy gradient

- Policy function may be approximated using a neural network

- This allows using gradient methods to improve the policy

$$\pi(a \mid s, \theta) = P(A_t = a \mid S_t = s, \theta_t = \theta)$$

θ: parameterization of π.

- Example

$$\pi(a \mid s, \theta) = \frac{e^{\phi(s,a)^\top \theta}}{\sum_b e^{\phi(s,b)^\top \theta}}$$

- $\phi(s,a)$: vector of features; $(\phi_1(s,a), \ldots, \phi_k(s,a))$.
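A small sketch of this softmax policy with linear preferences. The feature function `one_hot_features` is a hypothetical stand-in; any function returning a feature vector for a state-action pair would do.

```python
import math


def softmax_policy(theta, features, state, actions):
    """Return pi(a | s, theta) for each action, proportional to exp(phi(s,a) . theta)."""
    scores = [sum(f * t for f, t in zip(features(state, a), theta))
              for a in actions]
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot_features(state, action):
    """Hypothetical features: one indicator per action, ignoring the state."""
    return [1.0 if action == i else 0.0 for i in range(3)]


probs = softmax_policy([0.0, math.log(2.0), 0.0], one_hot_features,
                       state=None, actions=[0, 1, 2])
```

With these weights the middle action has twice the unnormalized preference of the others, so it receives half of the probability mass.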

Policy gradient theorem (see notes)

Gradient used to update weights θ:

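$$\nabla_\theta J(\theta) \propto \mathbb{E}_\pi \big[ G_t \, \nabla_\theta \ln \pi(A_t \mid S_t, \theta) \big]$$

- $G_t$: discounted return from step $t$ (estimated by MC sampling)

- $\pi(A_t \mid S_t, \theta)$: parameterized policy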

- There is a coefficient of proportionality but this is not important for gradient algorithms
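For a softmax policy with linear preferences, the score function has the closed form $\nabla_\theta \ln \pi(a \mid s, \theta) = \phi(s,a) - \sum_b \pi(b \mid s, \theta)\,\phi(s,b)$. The sketch below assumes that policy class and a fixed state, so `phis` is simply a list with one (made-up) feature vector per action; `gradient_sample` is a single-sample estimate of the expectation above.

```python
import math


def policy(theta, phis):
    """Softmax over the linear scores phi_b . theta, one entry per action."""
    scores = [sum(f * t for f, t in zip(phi, theta)) for phi in phis]
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_pi(theta, phis, a):
    """grad_theta ln pi(a) = phi_a - sum_b pi(b) * phi_b (softmax policy only)."""
    probs = policy(theta, phis)
    dim = len(theta)
    mean_phi = [sum(p * phi[i] for p, phi in zip(probs, phis)) for i in range(dim)]
    return [phis[a][i] - mean_phi[i] for i in range(dim)]


def gradient_sample(theta, phis, a, g):
    """One-sample estimate G_t * grad ln pi(A_t | S_t, theta)."""
    return [g * x for x in grad_log_pi(theta, phis, a)]


theta = [0.3, -0.2]
phis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # hypothetical features, 3 actions
sample = gradient_sample(theta, phis, a=2, g=2.0)
```

Averaging such samples over many visited state-action pairs approximates the gradient direction up to the constant of proportionality.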

REINFORCE algorithm

Algorithm:

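Repeat until convergence:

- Generate an episode $S_0, A_0, R_1, \ldots, R_T$ using policy $\pi(\cdot \mid \cdot, \theta)$

- For each step $t$ of this episode:

  - $G \leftarrow$ return at step $t$

  - Update $\theta$ using eq. (REINFORCE)

$$\theta \leftarrow \theta + \alpha \gamma^t G_t \nabla_\theta \ln \pi(A_t \mid S_t, \theta) \tag{REINFORCE}$$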
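The loop can be sketched end to end on a toy task. Everything task-specific here is an assumption made for illustration: a hypothetical 3-state corridor where stepping right from the last state ends the episode with reward 1, one-hot features over state-action pairs, a softmax policy, and a cap of 50 steps per episode.

```python
import math
import random

ACTIONS = [0, 1]      # hypothetical corridor: 0 = left, 1 = right
N_STATES = 3          # states 0, 1, 2; going right from state 2 ends the episode


def phi(s, a):
    """One-hot feature vector over (state, action) pairs."""
    v = [0.0] * (N_STATES * len(ACTIONS))
    v[s * len(ACTIONS) + a] = 1.0
    return v


def policy(theta, s):
    """Softmax policy pi(. | s, theta) with linear preferences."""
    scores = [sum(f * t for f, t in zip(phi(s, a), theta)) for a in ACTIONS]
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_pi(theta, s, a):
    """phi(s, a) - sum_b pi(b | s) phi(s, b), valid for the softmax policy."""
    probs = policy(theta, s)
    g = phi(s, a)
    for b in ACTIONS:
        g = [gi - probs[b] * fi for gi, fi in zip(g, phi(s, b))]
    return g


def generate_episode(theta, rng, max_steps=50):
    """Sample [(S_t, A_t, R_{t+1}), ...] by following the current policy."""
    s, episode = 0, []
    for _ in range(max_steps):
        a = rng.choices(ACTIONS, weights=policy(theta, s))[0]
        if a == 1 and s == N_STATES - 1:
            episode.append((s, a, 1.0))      # goal reached, episode ends
            break
        episode.append((s, a, 0.0))
        s = s + 1 if a == 1 else max(0, s - 1)
    return episode


def reinforce_update(theta, episode, alpha=0.1, gamma=0.9):
    """Apply theta <- theta + alpha * gamma^t * G_t * grad ln pi(A_t | S_t, theta)."""
    g, returns = 0.0, []
    for _, _, r in reversed(episode):        # G_t = R_{t+1} + gamma * G_{t+1}
        g = r + gamma * g
        returns.append(g)
    returns.reverse()
    for t, ((s, a, _), g_t) in enumerate(zip(episode, returns)):
        grad = grad_log_pi(theta, s, a)
        theta = [th + alpha * gamma ** t * g_t * gr for th, gr in zip(theta, grad)]
    return theta


rng = random.Random(0)
theta = [0.0] * (N_STATES * len(ACTIONS))
for _ in range(500):
    theta = reinforce_update(theta, generate_episode(theta, rng))
```

No baseline is subtracted from $G_t$ in this sketch, so the updates can be noisy; the step size and episode count are arbitrary choices.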

AlphaGo!

- AlphaGo: computer program that defeated a Go world champion. Arguably the strongest Go player in history.

- Invented highly innovative Go moves.

- Let's play Go!

Ingredients

Uses a combination of several of the methods we have seen:

- Neural network to approximate policy and value functions

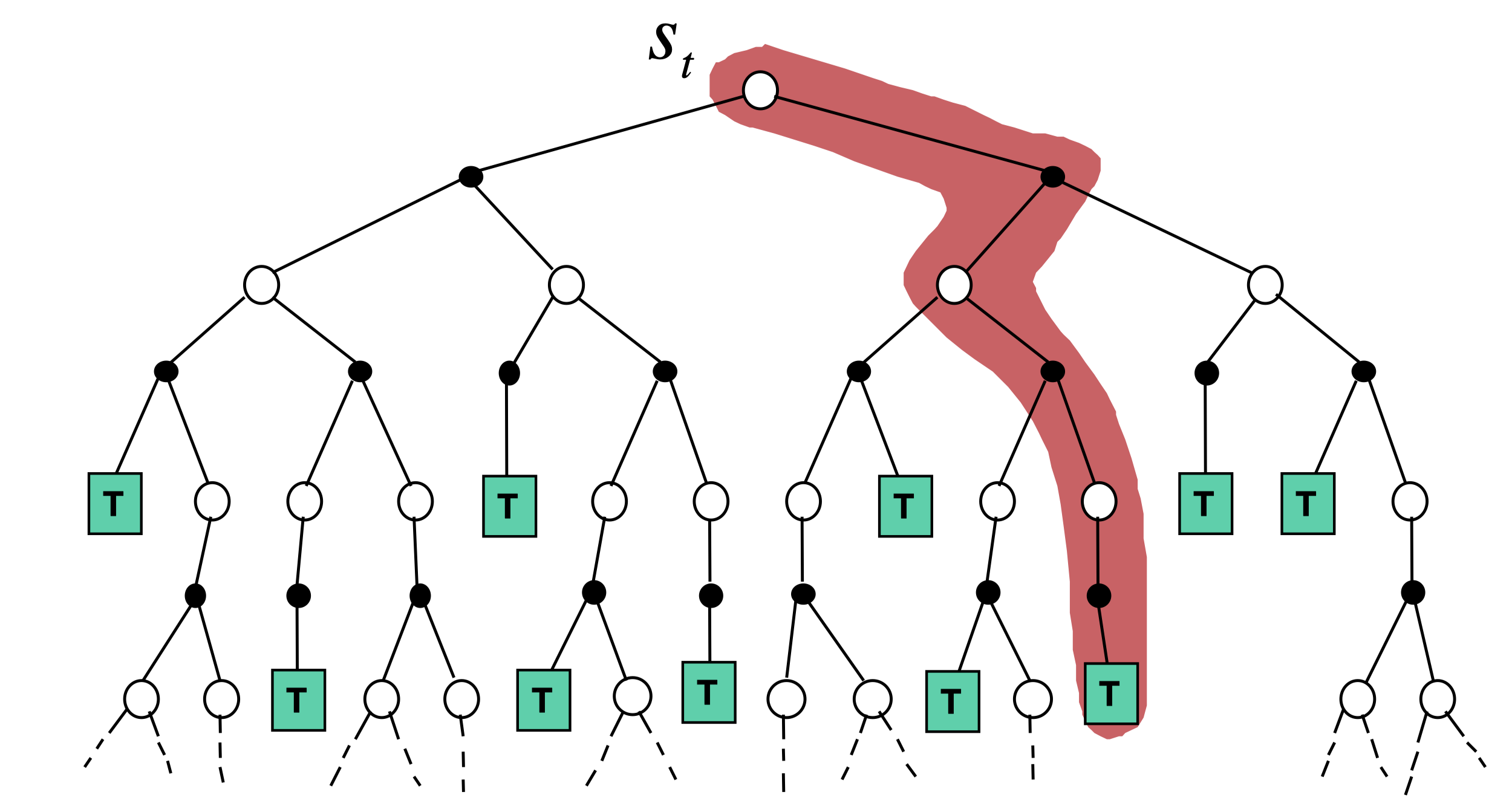

- Monte-Carlo tree search with temporal difference

- Policy gradient theorem to improve policy

- UCT (multi-armed bandit) strategy to select moves

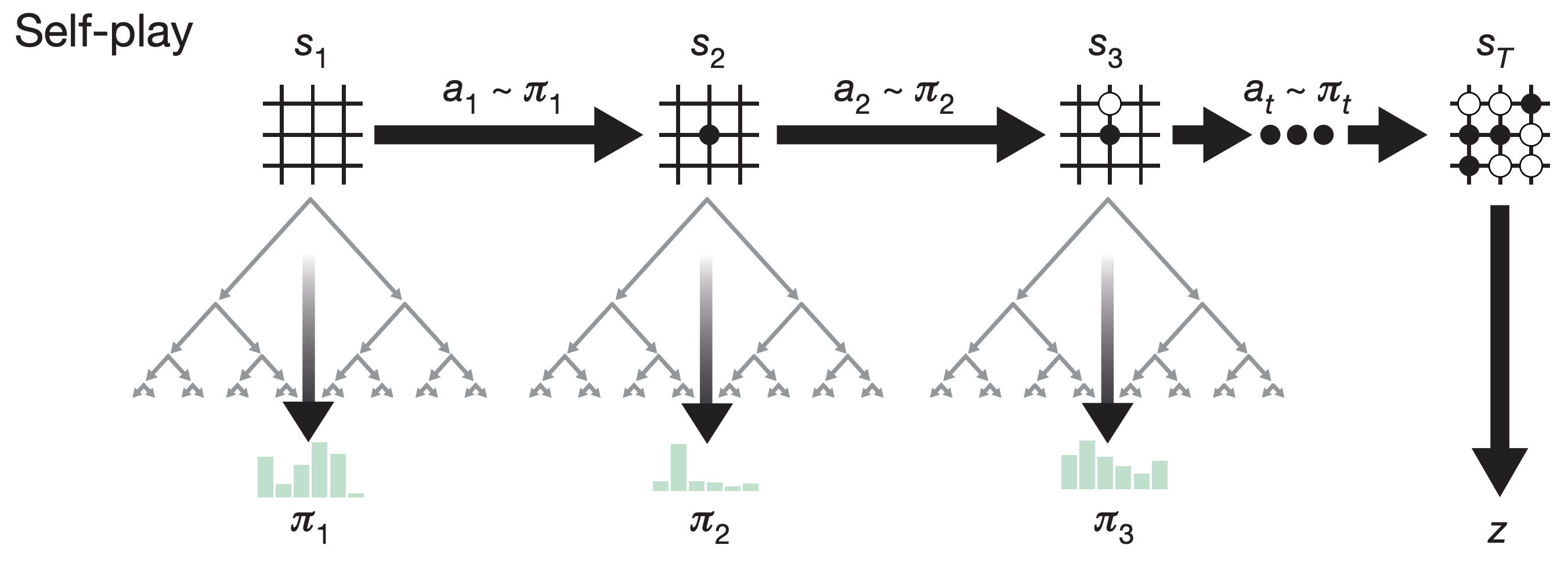

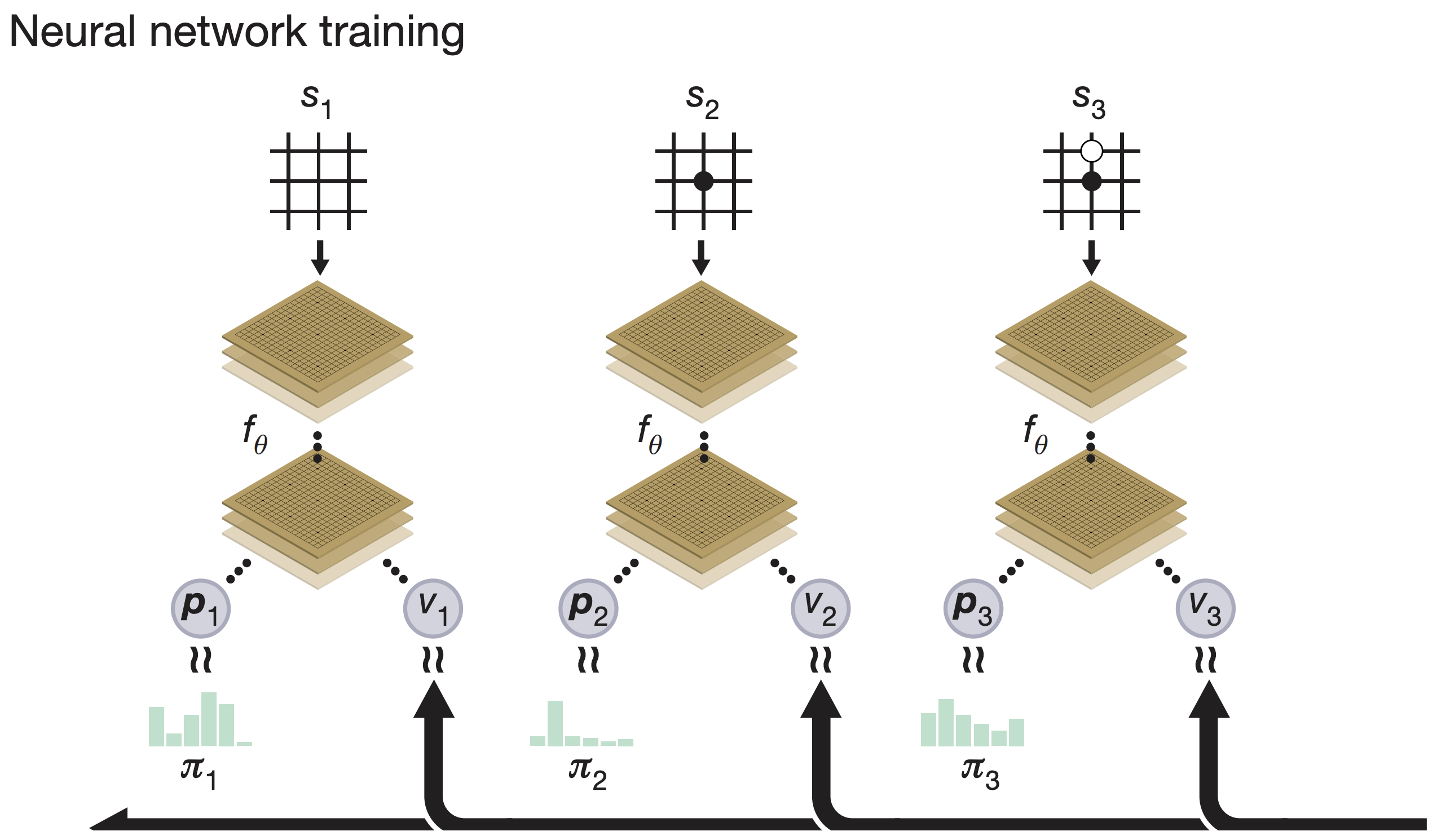

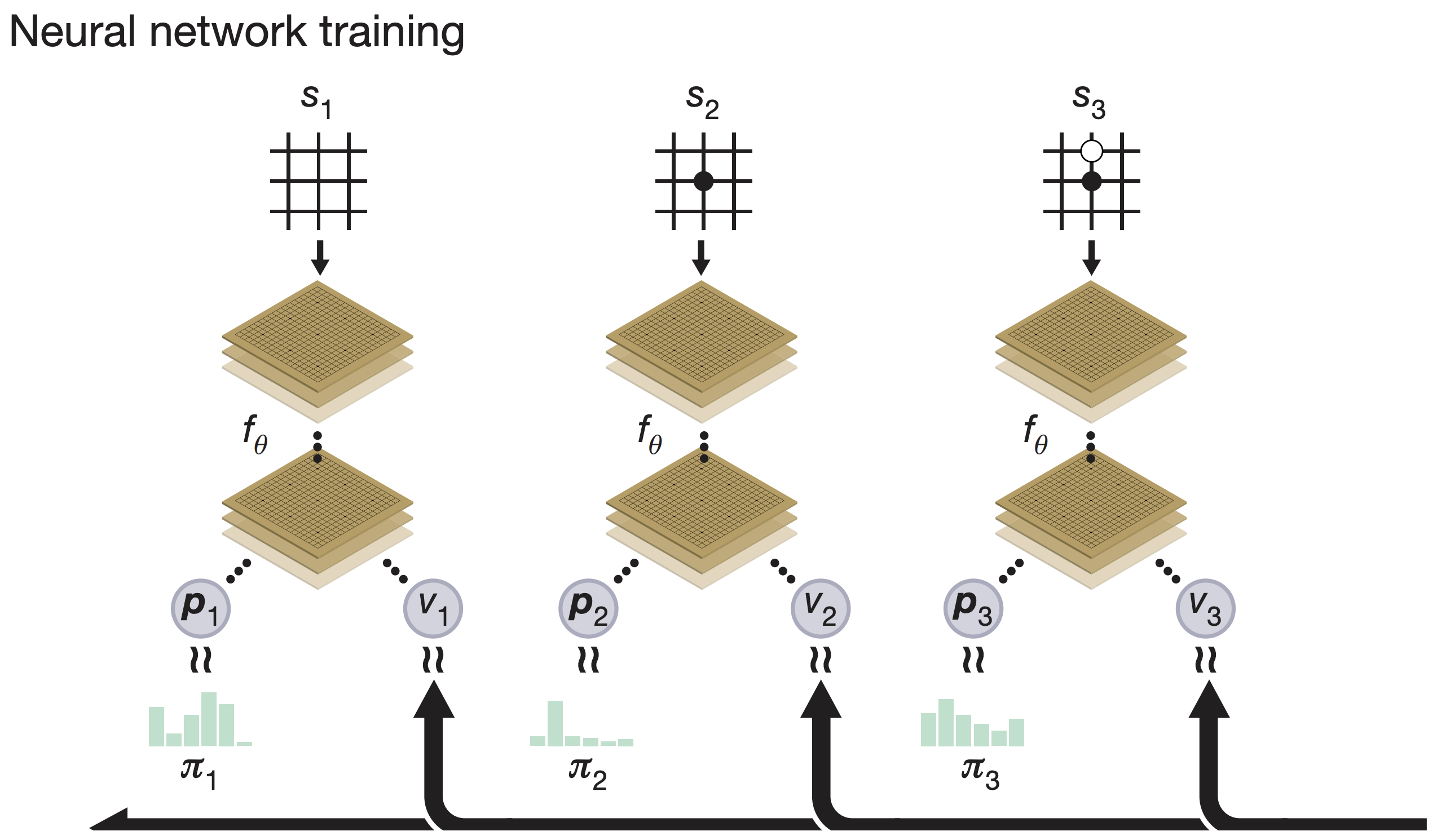

Neural networks: self-play

- Program plays a game s1, …, sT against itself.

- An MCTS is executed using the latest neural network fθ.

- Moves are selected according to the search probabilities computed by the MCTS, πt.
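One way the search probabilities can be turned into a move is sketched below. It assumes the probabilities are proportional to the MCTS visit counts raised to $1/\tau$ with a temperature $\tau$, as described for AlphaGo Zero; the function names and the visit counts are hypothetical.

```python
import random


def search_probabilities(visit_counts, temperature=1.0):
    """pi(a) proportional to N(a)^(1/temperature), from root visit counts."""
    powered = [n ** (1.0 / temperature) for n in visit_counts]
    total = sum(powered)
    return [p / total for p in powered]


def sample_move(visit_counts, rng, temperature=1.0):
    """Draw a move index according to the search probabilities."""
    probs = search_probabilities(visit_counts, temperature)
    return rng.choices(range(len(visit_counts)), weights=probs)[0]


counts = [10, 30, 60]                     # made-up visit counts for three moves
pi_t = search_probabilities(counts)
move = sample_move(counts, random.Random(0))
```

A lower temperature concentrates the probabilities on the most visited move, while a temperature of 1 keeps them proportional to the counts.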

Neural networks: policy/value functions

Neural network parameters θ are updated to:

- maximize the similarity of the policy pt to the search probabilities πt, and to

- minimize the error between the predicted winner vt and the game winner z.
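These two objectives can be combined into a single per-position loss. The sketch below assumes the form used for AlphaGo Zero, a squared value error plus a cross-entropy between the search probabilities and the network policy, and leaves out weight regularization; the function name and the small `eps` guard are additions for the example.

```python
import math


def policy_value_loss(p, v, pi, z, eps=1e-12):
    """(z - v)^2 - sum_a pi(a) * ln p(a) for one position.

    p:  network policy over moves      v: predicted winner in [-1, 1]
    pi: MCTS search probabilities      z: actual game winner (+1 or -1)
    """
    value_loss = (z - v) ** 2
    policy_loss = -sum(t * math.log(q + eps) for t, q in zip(pi, p))
    return value_loss + policy_loss


good = policy_value_loss(p=[0.5, 0.5], v=1.0, pi=[0.5, 0.5], z=1.0)
bad = policy_value_loss(p=[0.5, 0.5], v=-1.0, pi=[0.5, 0.5], z=1.0)
```

Minimizing the cross-entropy term is what "maximizing the similarity" amounts to here: it is smallest when the network policy matches the search probabilities.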

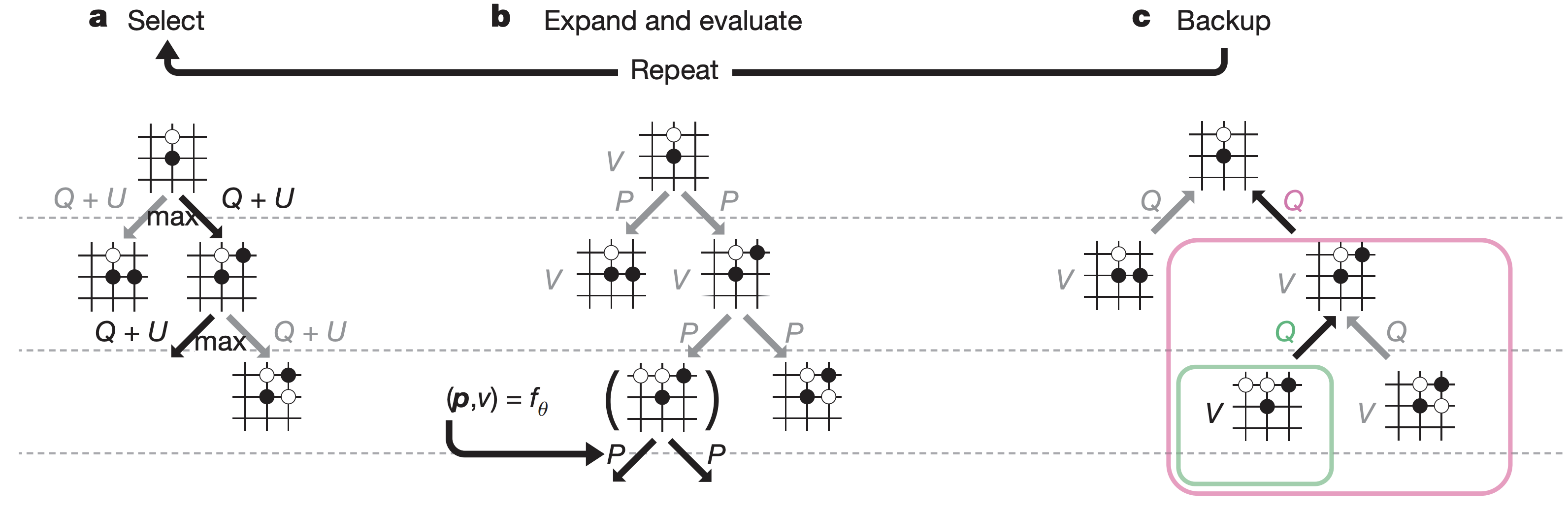

Example of MCTS

Policy improvement

Use the policy gradient theorem!

$$\Delta\theta \propto z_t \, \nabla_\theta \ln \pi_\theta(A_t \mid S_t)$$

UCT

Variant of PUCT algorithm was used:

$$a_t = \arg\max_a \big[ Q(s_t, a) + U(s_t, a) \big]$$

$$U(s, a) = C \, P(s, a) \, \frac{\sqrt{\sum_b N(s, b)}}{1 + N(s, a)}$$

- Added $P(s,a)$ to bias towards winning moves

- Abandoned $\log$ in favor of $\sqrt{\sum_b N(s,b)}$. This leads to more exploration.
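A sketch of this selection rule, assuming the square-root form of the exploration term used in AlphaGo Zero, $U(s,a) = C\,P(s,a)\,\sqrt{\sum_b N(s,b)}\,/\,(1 + N(s,a))$. The dictionaries of statistics and the constant `c` are made-up values for a single node.

```python
import math


def puct_select(Q, P, N, c=1.0):
    """Return the action maximizing Q(s,a) + c * P(s,a) * sqrt(sum_b N(s,b)) / (1 + N(s,a))."""
    total_visits = sum(N.values())

    def score(a):
        exploration = c * P[a] * math.sqrt(total_visits) / (1 + N[a])
        return Q[a] + exploration

    return max(Q, key=score)


# Hypothetical statistics for two moves at one node.
Q = {"a": 0.5, "b": 0.4}
P = {"a": 0.5, "b": 0.5}
unvisited_choice = puct_select(Q, P, N={"a": 9, "b": 0})
visited_choice = puct_select(Q, P, N={"a": 9, "b": 7})
```

While move `"b"` is unvisited its exploration bonus dominates; once both moves have been visited several times the higher action value of `"a"` wins.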

Training time graph

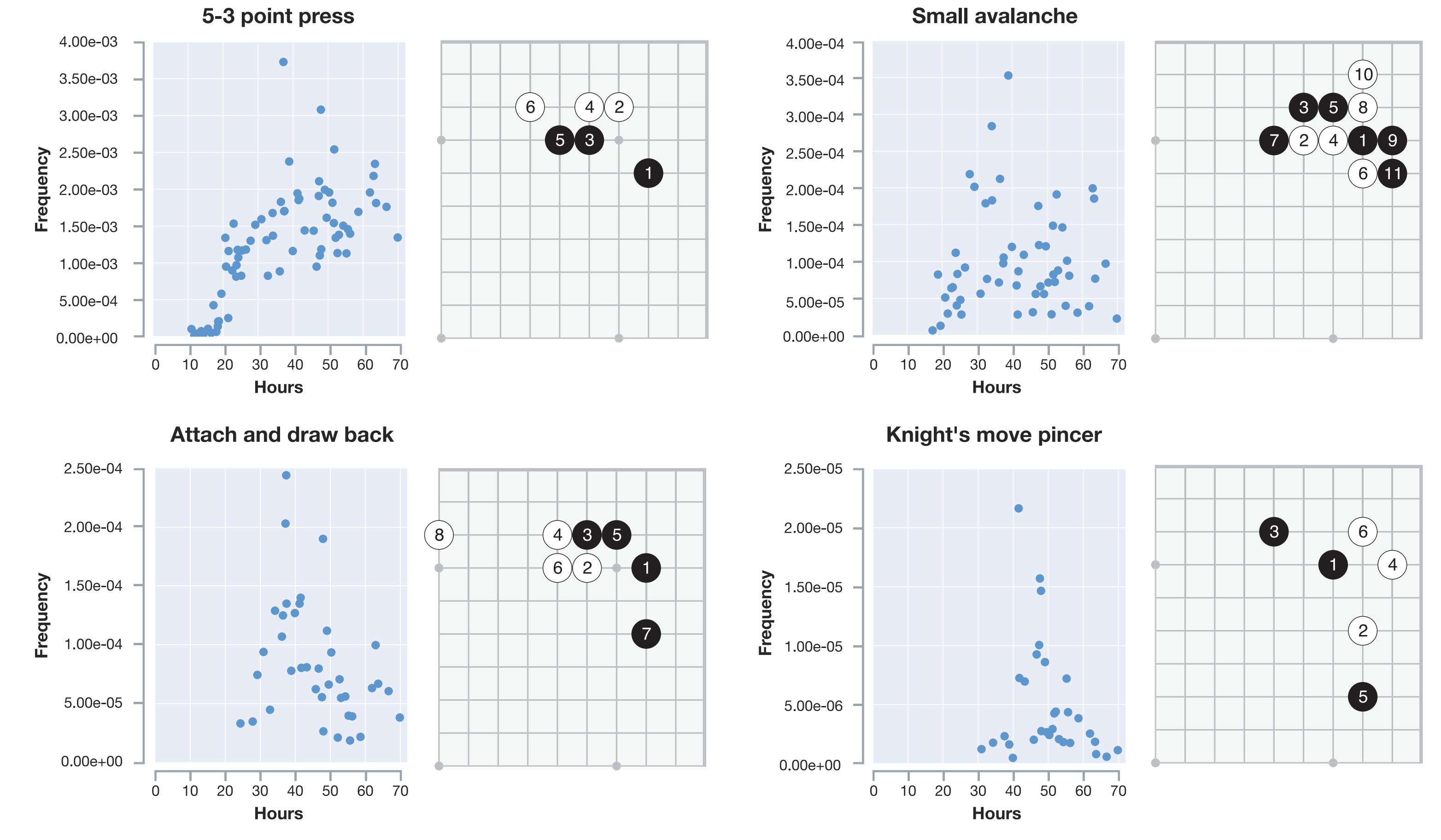

Joseki

- Joseki: corner sequences common in professional Go

- Next slide: frequency of occurrence over time during training for different joseki

- Ultimately, AlphaGo preferred new joseki variants that were previously unknown!

Training time is shown on the x-axis.

Strategies learned by AlphaGo

Try out AlphaGo

You select moves and see how the game evolves from there.

GitHub codes

https://github.com/suragnair/alpha-zero-general